In zeroing in on a starting data set as I build toward a more fully conceived project for next spring, I’ve found it necessary to first demarcate my chosen subject matter: to work backwards, so to speak.

> The prefix “hyper” refers to multiplicity, abundance, and heterogeneity. A hypertext is more than a written text, a hypermedium is more than a single medium. – Preface to *HyperCities*

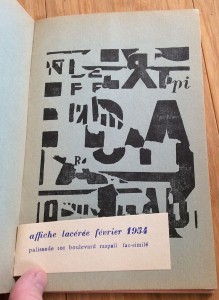

Hypergraphy, sometimes called hypergraphics or metagraphics, is a method of mapping and graphic creation used in the mid-20th century by various Surrealist movements. The approach shares some similarities with asemic writing, a wordless, open semantic form of writing whose name means literally “having no specific semantic content.” Some forms of calligraphy (think stylized Japanese ink-brush work) serve a similar function: their non-specificity leaves space for the reader to fill in, interpret, and deduce meaning. The viewer is suspended in a state somewhere between reading and looking. Traditionally, true asemic writing only occurs when its creator cannot read their own writing.

Example work:

https://en.wikipedia.org/wiki/Hypergraphy#/media/File:GrammeS_-_Ultra_Lettrist_hypergraphics.jpg

Jorge Luis Borges was an Argentine short-story writer, essayist, poet, translator, and librarian. A key figure in Spanish-language literature, he is sometimes regarded as one of the founders of magical realism. He had gone blind by the mid-1950s, decades before his death in 1986, and in his blindness he continued to dictate new works (mostly poetry) and to give lectures. Themes in his work include books, imaginary libraries, the art of memory, the search for wisdom, mythological and metaphorical labyrinths, dreams, and the concepts of time and eternity. One of his stories, “The Library of Babel,” centers on a library containing every possible 410-page book. Another, “The Garden of Forking Paths,” presents the idea of forking paths through networks of time, none of which is the same, all of which are equal. Borges returns time and again to the image of “a labyrinth that folds back upon itself in infinite regression,” so that we “become aware of all the possible choices we might make.”[88]

The forking paths branch to represent these choices, each of which ultimately leads to a different ending.
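To make this branching structure concrete, here is a minimal Python sketch that models a garden of forking paths as a graph of narrative fragments and lists every route from a starting fragment to an ending. The fragment names and the `forking_paths` function are hypothetical illustrations. They do not come from Borges’s text or from any existing tool. Borges’s labyrinth can fold back on itself, so the sketch refuses to revisit a fragment already on the current path, which keeps the enumeration finite.

```python
from typing import Dict, List

# A hypothetical "garden": each key is a narrative fragment, and its value lists
# the fragments that may follow it (the forks). A fragment with no successors
# is treated as an ending.
Garden = Dict[str, List[str]]


def forking_paths(garden: Garden, start: str) -> List[List[str]]:
    """Return every path from `start` to an ending, in depth-first order."""
    paths: List[List[str]] = []

    def walk(node: str, path: List[str]) -> None:
        path = path + [node]  # copy so sibling branches don't share state
        successors = garden.get(node, [])
        if not successors:
            paths.append(path)  # reached an ending
            return
        for nxt in successors:
            # Skip forks that fold back onto the current path so the walk
            # terminates; a branch whose every fork loops back yields no ending.
            if nxt not in path:
                walk(nxt, path)

    walk(start, [])
    return paths


if __name__ == "__main__":
    garden: Garden = {
        "arrival": ["the maze", "the library"],
        "the maze": ["ending A", "ending B"],
        "the library": ["ending B"],
    }
    for p in forking_paths(garden, "arrival"):
        print(" -> ".join(p))
    # arrival -> the maze -> ending A
    # arrival -> the maze -> ending B
    # arrival -> the library -> ending B
```

Two of the routes arrive at the same ending (“ending B”) but remain distinct paths. This loosely echoes the idea that the paths are never the same, yet all are equal.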

Borges is also known for the philosophical term “Borgesian conundrum.” From Wikipedia:

> The philosophical term “Borgesian conundrum” is named after him and has been defined as the ontological question of “whether the writer writes the story, or it writes him.”[89] The original concept put forward by Borges is in “Kafka and His Precursors”; after reviewing works that were written before Kafka’s, Borges wrote:

> If I am not mistaken, the heterogeneous pieces I have enumerated resemble Kafka; if I am not mistaken, not all of them resemble each other. The second fact is the more significant. In each of these texts we find Kafka’s idiosyncrasy to a greater or lesser degree, but if Kafka had never written a line, we would not perceive this quality; in other words, it would not exist. The poem “Fears and Scruples” by Browning foretells Kafka’s work, but our reading of Kafka perceptibly sharpens and deflects our reading of the poem. Browning did not read it as we do now. In the critics’ vocabulary, the word ‘precursor’ is indispensable, but it should be cleansed of all connotation of polemics or rivalry. The fact is that every writer creates his own precursors. His work modifies our conception of the past, as it will modify the future.

I’m circling around two or three different project ideas:

- Close Reading/Qualitative Analysis: Hypertextualized Borges poems and short stories, with an emphasis on works created during his period of blindness, reimagined as a garden of forking paths. Break the works down into levels of constituent parts, then create an engine to reassemble them using a methodological algorithm informed by his ideas about non-linearity and by the morphology of his oeuvre.

  - (1.5) Potential visualization component: a Hypergraphy Engine (simulated blindness) that interacts with the hypertextualized artifacts from the first idea (1.0).

- Distant Reading/Quantitative Analysis: Topics as “forms of discourse” in Borges and his precursors (potential candidates: Cervantes, Kafka, Schopenhauer, Quevedo, Gracián, Pascal, Coleridge, Poe).

- … (Running out of time; I’ll continue this post tonight.)
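As a first pass at the “break down, then reassemble” step in the first idea, the minimal Python sketch below splits a text into two levels of constituent parts (paragraphs, then sentences) and recombines them. The recombination rule is a placeholder assumption standing in for the methodological algorithm that is still to be designed: it applies a seeded shuffle of sentences within each paragraph. The regex sentence splitter is deliberately naive. `decompose`, `reassemble`, and the sample text are all hypothetical.

```python
import random
import re
from typing import List

# Naive sentence boundary: split on whitespace that follows . ! or ?
# (an assumption; real translations of Borges would need a proper tokenizer).
SENTENCE_BREAK = re.compile(r"(?<=[.!?])\s+")


def decompose(text: str) -> List[List[str]]:
    """Level 1: paragraphs separated by blank lines. Level 2: sentences."""
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    return [SENTENCE_BREAK.split(p) for p in paragraphs]


def reassemble(parts: List[List[str]], seed: int) -> str:
    """Placeholder rule: shuffle the sentences inside each paragraph.

    Paragraph order is kept; a fixed seed always yields the same variant.
    """
    rng = random.Random(seed)
    variant = []
    for sentences in parts:
        shuffled = sentences[:]  # copy so the decomposition is not mutated
        rng.shuffle(shuffled)
        variant.append(" ".join(shuffled))
    return "\n\n".join(variant)


if __name__ == "__main__":
    sample = "I walk. The path forks.\n\nI choose. Time branches."
    parts = decompose(sample)
    print(parts)
    # [['I walk.', 'The path forks.'], ['I choose.', 'Time branches.']]
    print(reassemble(parts, seed=1))
```

Each seed reproduces the same variant, so a seed could serve as the identifier of one “path” through a text.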

Taylor