For my data project (and perhaps leading into the final project), I’m interested in finding a way to map, graph, or visualize a set of linguistic / formal trends in contemporary poetry. Based on a set of inter-connected issues and ideas, I’ve arrived at the following question, which is probably still too large: what is the relationship between “appropriation,” race, and gender in poetry of the “avant-garde”?

In coming up with this question, my first idea was to use digital tools to see how certain words or trends in language have been appropriated (or repurposed) in poetry. How much is “creative” (i.e., original) and how much is “uncreative” (i.e., stolen), and where is the line between the two?

As a writer who has almost always used other texts to generate my own, I know the politics, practice, and implications of this question are complicated. It’s certainly not a question that can or should be cleanly “solved,” but perhaps that makes it fertile for a digital project. And a number of recent course readings (e.g.,” Topic Modeling and Figurative Language”), workshops (Web Scraping Social Media) and blog posts (Matt’s on “Poemage”; Taylor’s on “Hypergraphy”) have lead me to believe there are some tools I could explore to attempt this kind of “close”-and-“distant” reading.

But what do I mean by “language,” “appropriation,” and “contemporary poetry”? Which text(s) do I want to analyze, and how?

I thought of looking at poems in the most recent issue of Best American Poetry, if only because of the controversy surrounding a particular poem by a white man named Michael Derrick Hudson in that anthology. Hudson submitted his poem 40 times under his own name, and then 9 times under the pseudonym of Yi-Fen Chou, hoping that by posing as a Chinese woman (“appropriating” a particular name and identity), his poem would be accepted. And eventually, it was. According to Hudson, “it took quite a bit of effort to get (this poem) into print, but I’m nothing if not persistent.”

(The idea of “persistence” lead me to a (possibly) related question: how often do men, women, and POC submit the same piece of writing for publication, and how often are they published? Is this even a type of data that I could find?)

On a language level, an analysis of “appropriation” in a selection of texts could look something like this: take a set of poems (perhaps from that same issue of Best American Poetry), and use tools like Poemage or Topic Modeling to identify certain language trends: words, phrases, or perhaps bigger-picture patterns, like syntax, formal constraints, or rhyme. Then, scrape the web to see how and where these language and formal trends have been used prior to these poems: in literature, and / or in other places (blogs, social media, etc.). And if this dataset is too unmanageable, perhaps just look for how language from non-literary texts gets “appropriated” into poetic texts. This doesn’t relate to “appropriated” language to race and gender yet, but I’m getting there.

Another idea I had for the dataset (which I originally thought of as separate, but now seems related), was to use language-analysis to ask: what “is” (or marks) the “avant-garde” in poetry?

It’s hard, and perhaps silly, to try to define or locate a set of poems that are somehow representative of the “avant-garde,” which is itself a problematic term: most likely only historical, and not really in or of contemporary use. But the reason I thought of this question was my interest in an essay titled “Delusions of Whiteness in the Avant-Garde” by Cathy Park Hong, almost a year prior to the Michael Derrick Hudson case, in the journal Lana Turner – a journal “of poetry and opinion” in which I have published often, and which might also be thought of as a home for the “avant-garde.” In this essay, Hong claims that “to encounter the history of avant-garde poetry is to encounter a racist tradition.” A second dataset would then be in service of documenting and support this claim (and those that follow) through maps, graphs, or even hypertexts.

To do so, I might first take the poems in (lets say, that issue of Lana Turner), and use (lets say, “topic modeling”) to see if any words, syntax, or forms can be constituted into a pattern that might be used to define “the avant-garde” (or at least, the machine’s perception of it). To be more specific by borrowing some terms from Hong’s essay: what are the “radical languages and forms” that have been “usurped” (appropriated) without proper acknowledgement? What are “Eurocentric practices” in poetry these days? How can I use digital tools to further define these terms, and then map them against the race and gender of their authors? How does this information relate to the “persistence” with which (these poets) tend to submit their work? Is this part of the same dataset, or related?

The biggest issue with my proposal seems to be figuring out the scope of the project; how many and which texts to analyze. If I just use the most recent issues of Best American Poetry and / or Lana Turner, would I have enough, or too much data? And if I’m exploring these greater social issues, should I instead be mapping the controversies surrounding this discourse (on social media, for example), rather trying to analyze any particular text itself? How could I possibly choose texts that are representative of such large claims? One tentative thought I had was to analyze my own poems. This approach is appealing, not only because I’m most comfortable targeting myself, but also because it could offer the clearest dataset. That said, I hesitate to make this “critical” project about my own creative work, about which I may know or think too much, or at least, too much more than an algorithm or computer. And my poems are certainly not “representative” on their own.

That’s enough of this meandering post for now – – (I’m glad I can edit this) and welcome any thoughts or feedback – –

– – Sara

PS – and if this “dataset” proves too large or complicated with its various tools and politics (which I’m starting to think it very well might), another idea I have (not related!) is to analyze the language (again: words, syntax, and structural forms) that teachers (adjuncts and or full-time professors) use in their writing composition syllabi (lets say, within the CUNY network), as well as looking at the texts that they teach. I’m pretty sure that “official” student evaluations are made public (at CUNY, teachers need to agree to this) – but there is also the (problematic, but possibly useful) Rate My Professors, among other blogs and social media where student reactions might occur. It could be interesting to look at the relationship between how composition syllabi are written and how students perform and / or react. And I think this project could lead to the kind of “browsing” that Stephen Ramsey describes, where as my long, pervious proposal above might constitute too much of a “search,” and one that is overloaded, at that.

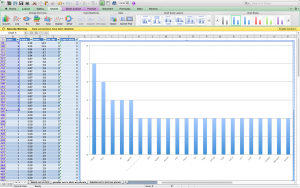

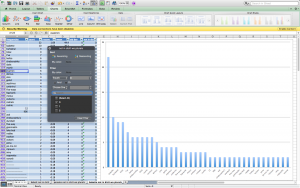

PARADES column graph (screen shot)

PARADES column graph (screen shot) BABETTE column graph (screen shot)

BABETTE column graph (screen shot)